The Human Element: Redefining Innovation in the Age of Technology

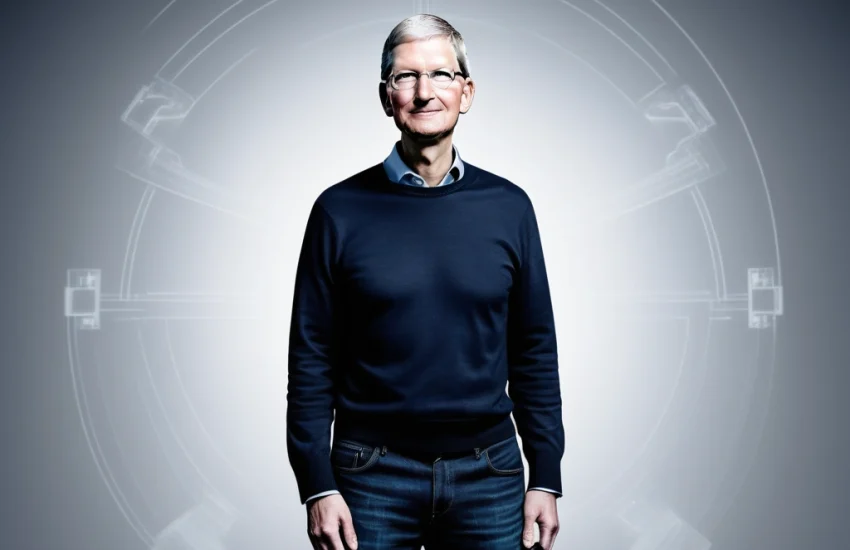

In a world increasingly shaped by technological advancements, Apple CEO Tim Cook’s assertion, “Technology without humanity is just complexity – true innovation enhances our shared human experience,” serves as a powerful and necessary reminder of the ethical considerations that must underpin all technological endeavors. This statement is not merely a philosophical musing but a practical guidepost for navigating the complex landscape of modern technology. It underscores the critical need for human-centered design, where the focus shifts from technological capability to human well-being and societal benefit.

This article delves into Cook’s philosophy, exploring the importance of prioritizing ethical innovation and the potential consequences of neglecting responsible technology in the face of emerging technologies like artificial intelligence and automation. The pursuit of technological advancement must be tempered by a profound understanding of its ethical implications, ensuring that progress serves humanity rather than the other way around.

Tim Cook’s perspective on ethical innovation challenges the traditional view that technological progress is inherently beneficial. He advocates for a more nuanced approach, emphasizing that true innovation must be measured not just by its technical prowess but by its positive impact on society. This involves a deliberate and thoughtful design process that considers the needs and values of the people who will use the technology. For instance, Apple’s focus on user privacy and accessibility features demonstrates a commitment to human-centered design, reflecting a belief that technology should empower individuals while respecting their fundamental rights. This approach stands in stark contrast to the common rush to market without adequate consideration of potential negative societal impact, and it highlights the role of leadership in championing ethical technology.

The concept of human-centered design extends beyond mere usability; it encompasses a broader understanding of the societal impact of technology. This requires a deep engagement with ethical considerations across all stages of technological development. Take, for example, the development of AI algorithms. Without careful attention to data bias and algorithmic transparency, these systems can perpetuate and even amplify existing societal inequalities.

This is not just a technical challenge; it is a profound ethical imperative. Responsible technology requires that we proactively address these issues, ensuring that AI systems are fair, accountable, and beneficial to all members of society. This also includes considering the environmental impact of technology, promoting sustainable technology practices that minimize harm and contribute to a healthier planet.

Furthermore, the leadership required to drive ethical innovation must come from the top down. Leaders must champion a culture of digital ethics within their organizations, embedding ethical considerations into the very fabric of the innovation process. This involves fostering open dialogue, encouraging critical thinking, and establishing clear ethical guidelines that govern the development and deployment of technology. It also means being willing to prioritize ethical concerns over short-term gains, a commitment that requires courage and a long-term vision. For example, companies like Patagonia, known for their commitment to sustainability, demonstrate that ethical practices can be good not only for society but also for business. This highlights the potential of responsible technology to drive both social and economic progress.

Tim Cook’s philosophy thus serves as a critical framework for navigating the complex ethical landscape of modern technology. By emphasizing human-centered design and responsible technology, we can ensure that innovation serves to enhance our shared human experience, rather than undermining it. This requires a collective commitment to ethical innovation, with leaders at the forefront, championing a culture of digital ethics and promoting the development of technologies that are not only powerful but also just and sustainable. Ultimately, the future of technology depends on our ability to prioritize human values and societal well-being, ensuring that technological progress is a force for good in the world.

Tim Cook’s Vision: Human-Centered Technology

Tim Cook’s vision transcends the mere creation of sophisticated technology; it centers on a human-centric approach where innovation serves as a catalyst for enriching human lives. He challenges the prevailing Silicon Valley dogma that technological advancement is an end in itself, instead positing that true innovation must prioritize human well-being and societal benefit. This philosophy, deeply embedded in Apple’s corporate culture, manifests in products designed for accessibility, privacy features that empower users, and a commitment to sustainable practices. Cook’s leadership in this arena sets a powerful example for the tech industry, demonstrating that ethical considerations are not merely a constraint but a driving force for meaningful innovation.

This human-centered approach is evident in Apple’s focus on accessibility features. From VoiceOver to Switch Control, these integrated tools empower individuals with disabilities to engage fully with technology, fostering inclusivity and breaking down barriers. This commitment goes beyond mere compliance; it reflects a deep understanding of technology’s potential to enhance human lives across the spectrum of human experience. By prioritizing accessibility, Cook demonstrates that ethical innovation is not just good business; it’s a fundamental human imperative.

Furthermore, Cook’s emphasis on data privacy underscores his commitment to human-centered design. In an era of rampant data collection and surveillance, Apple has taken a strong stance on protecting user information, championing encryption and advocating for stricter privacy regulations. This commitment to user privacy is not just a marketing tactic; it’s a core value that resonates deeply with customers who are increasingly concerned about the ethical implications of data exploitation. Cook’s leadership in this area has set a new standard for the industry, demonstrating that responsible technology can be both innovative and privacy-preserving.

Beyond product development, Cook’s leadership extends to advocating for societal good. He has been a vocal proponent of environmental sustainability, leading Apple’s efforts to transition to renewable energy and reduce its carbon footprint. This commitment to environmental responsibility reflects a broader understanding of technology’s impact on the planet and the need for sustainable practices. By prioritizing sustainability, Cook demonstrates that ethical innovation encompasses not only human well-being but also the health of our planet. His actions underscore the interconnectedness of technology, society, and the environment.

Cook’s vision of human-centered technology extends to fostering a culture of ethical innovation within Apple. He encourages employees to consider the societal impact of their work, promoting open dialogue about ethical dilemmas and fostering a sense of responsibility. This emphasis on ethical considerations is not simply a matter of compliance; it’s a core value that permeates the organization, shaping product development, business practices, and corporate social responsibility initiatives. By cultivating a culture of ethical innovation, Cook ensures that Apple’s technological advancements contribute to a more just, equitable, and sustainable future.

Navigating the Ethical Landscape of Artificial Intelligence

The rapid advancement of artificial intelligence presents a dual narrative of immense potential and profound ethical challenges, demanding a nuanced approach that prioritizes responsible technology development. AI algorithms, while capable of processing vast datasets to identify patterns and make predictions, are not inherently neutral. They learn from the data they are fed, and if that data reflects existing societal biases, the algorithms can inadvertently perpetuate and even amplify these biases, leading to discriminatory outcomes in areas ranging from loan applications to criminal justice.

This underscores the critical need for ongoing evaluation and mitigation strategies to ensure fairness, transparency, and accountability in AI systems, a cornerstone of ethical innovation. The imperative is not merely to build powerful AI but to build AI that is just and equitable.

One of the foremost ethical concerns surrounding AI is the lack of transparency in how many algorithms make decisions. These complex systems, often referred to as ‘black boxes,’ can be difficult to understand, making it challenging to identify and correct biases or errors. This opacity undermines trust and accountability, especially when AI is used in high-stakes decision-making processes. For example, facial recognition systems, often trained on datasets that underrepresent certain demographic groups, have been shown to exhibit significantly higher error rates for people of color, raising serious questions about their deployment in law enforcement and other sensitive areas. Addressing this challenge requires a concerted effort to develop explainable AI (XAI) techniques that can provide insights into the decision-making processes of these algorithms.

Further complicating the ethical landscape of AI is the question of responsibility when things go wrong. If an autonomous vehicle causes an accident, who is held accountable: the programmer, the manufacturer, or the user? This lack of clear lines of responsibility creates legal and ethical gray areas that must be addressed proactively. As AI becomes increasingly integrated into our daily lives, it is crucial to develop regulatory frameworks that establish clear guidelines for the development and deployment of AI systems. These frameworks should prioritize human-centered design, placing human well-being and societal benefit at the core of AI development. Tim Cook, along with other tech leaders, has repeatedly emphasized that technology ethics must guide AI development to avoid unintended negative consequences.

The societal impact of AI also extends to the potential for job displacement as automation becomes more widespread. While AI-driven automation can increase efficiency and productivity, it also raises concerns about the future of work, particularly for those in routine or manual labor. This necessitates proactive strategies for reskilling and upskilling the workforce to prepare individuals for the jobs of the future, as well as a broader societal conversation about how to ensure that the benefits of AI are shared equitably. Sustainable technology practices must include a focus on human capital and creating opportunities for all. Moreover, organizations must take the lead in fostering a culture of digital ethics, where employees are encouraged to critically assess the ethical implications of their work and prioritize human values.

In light of these complex challenges, it is evident that navigating the ethical landscape of AI requires a multi-faceted approach that involves collaboration between technologists, policymakers, ethicists, and the broader public. The development of responsible technology is not just a technical challenge but a societal one, demanding that we prioritize ethical considerations at every stage of the innovation process. Ultimately, the goal should be to harness the power of AI to enhance the human experience, not to diminish it. True progress is not simply about technological advancement, but about ensuring that such advancements align with our shared values and contribute to a more just and equitable world.
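To make the idea of ongoing evaluation more concrete, the sketch below shows one simple way an audit might compare error rates across demographic groups, the kind of disparity described above for facial recognition. It is only an illustration: the function names, the group labels, and the toy records are all hypothetical, and a real audit would use far larger samples and additional fairness metrics.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: error rate} for (group, predicted, actual) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: (group label, model prediction, true label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
]

rates = error_rate_by_group(records)
gap = max_error_gap(rates)
print(rates)  # {'group_a': 0.25, 'group_b': 0.5}
print(gap)    # 0.25
```

On this toy data the second group is misclassified twice as often as the first, the sort of gap an audit would flag for further investigation before a system is deployed.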

Automation and the Future of Work: Balancing Progress and Human Capital

Automation, while heralding unprecedented gains in efficiency and productivity, presents a complex web of ethical and societal challenges, most notably the displacement of workers and the exacerbation of economic inequality. The integration of advanced technologies, such as AI-driven robotic systems and sophisticated algorithms, into various sectors is rapidly transforming the labor landscape. This shift demands a proactive approach from both businesses and policymakers to not only harness the benefits of automation but also mitigate its potential adverse consequences, ensuring that technological progress serves the broader interests of society.

For example, the manufacturing sector has seen a significant reduction in manual labor due to robotic automation, requiring a re-evaluation of workforce needs and skill sets. This transition necessitates an ethical framework that prioritizes the well-being of those impacted by these changes. Tim Cook’s philosophy of human-centered design becomes particularly relevant when considering the implications of automation. It calls for a responsible technology approach, where the focus is not solely on maximizing output but also on ensuring that technological advancements contribute to a more inclusive and equitable society.

This involves not only considering the immediate economic impacts but also the long-term effects on social structures and individual well-being. For instance, companies should invest in retraining programs that equip employees with the skills needed to navigate the changing job market, moving from repetitive manual tasks to roles that require critical thinking and problem-solving skills. This requires a collaborative effort between industry, educational institutions, and government agencies to ensure that workers are not left behind in the era of automation.

This approach aligns with the broader concept of sustainable technology, where innovation is guided by ethical considerations and a commitment to social responsibility. The ethical dimension of automation extends beyond job displacement to include considerations of algorithmic bias and fairness. AI-driven automation systems, if not carefully designed and monitored, can perpetuate and even amplify existing societal biases, leading to discriminatory outcomes in hiring, promotion, and other critical areas of employment. Addressing these biases requires a commitment to transparency and accountability in the development and deployment of AI systems.

This includes implementing rigorous testing protocols, auditing algorithms for bias before and after deployment, and establishing mechanisms for redress when errors occur. In this context, the principles of responsible technology and ethical innovation are paramount, ensuring that automation serves as a force for positive social change, rather than a driver of inequality. The focus on societal impact must be central to any technological advancement.

Leadership plays a crucial role in shaping how organizations navigate the challenges of automation. Leaders must champion a culture of ethical innovation, where the societal implications of technological decisions are given due consideration. This includes investing in research to understand the long-term effects of automation, engaging in open dialogue with employees and stakeholders, and implementing strategies to mitigate potential negative impacts. Companies should not view automation solely through the lens of cost reduction and increased profits but rather as an opportunity to create a more productive and fulfilling work environment for all.

This can be achieved by focusing on reskilling and upskilling programs, as well as by creating new roles that leverage human skills alongside automation. Ultimately, the responsible implementation of automation requires a commitment from leaders to prioritize human values and societal good.

Furthermore, the discussion around automation must also include a focus on data privacy and digital ethics. As automation systems become increasingly sophisticated, they rely on vast quantities of data, including personal information. Ensuring the security and ethical use of this data is paramount.

Companies must be transparent about their data collection practices and provide individuals with control over their own data. This includes establishing robust data governance frameworks and adhering to the highest standards of data privacy. By prioritizing data privacy, businesses can build trust with their employees and customers, fostering a more responsible and sustainable approach to technological innovation. This holistic approach, encompassing technology, ethics, innovation, leadership, and society, is critical for navigating the complex landscape of automation and ensuring that it serves as a catalyst for progress, not a driver of division.

Data Privacy in the Digital Age: Safeguarding Individual Rights

The increasing reliance on data-driven technologies necessitates a robust framework for data privacy, moving beyond mere compliance to embrace a culture of responsible data handling. Protecting sensitive personal information is not only a legal imperative, mandated by regulations like GDPR and CCPA, but also a fundamental ethical responsibility. Businesses must prioritize data security and transparency, not as a cost center but as a core value, empowering individuals with genuine control over their digital footprint. This requires moving away from opaque data practices and towards clear, understandable privacy policies that allow users to make informed decisions about their data.

The concept of ‘privacy by design’ should be embedded into the development process of all technologies, ensuring that data protection is a primary consideration from the outset. Tim Cook, a vocal advocate for data privacy, has consistently emphasized that technology should serve humanity, not exploit it. This perspective underscores the significance of ethical innovation in the digital age. For example, Apple’s approach to user privacy, such as its App Tracking Transparency feature, demonstrates a commitment to empowering users, even when it may impact its own business model.

This contrasts with other tech companies that have faced criticism for their data collection practices, highlighting the crucial difference between pursuing profit and prioritizing user rights. This commitment to data privacy is not just a matter of compliance but a reflection of a broader philosophy of responsible technology and human-centered design. The ethical challenges surrounding data privacy are further complicated by the rise of artificial intelligence (AI). AI algorithms often rely on vast amounts of personal data, raising concerns about bias, discrimination, and potential misuse.

For instance, facial recognition technology, trained on biased datasets, can lead to inaccurate and discriminatory outcomes, particularly for marginalized communities. Therefore, ensuring fairness, transparency, and accountability in AI systems is paramount. This involves not only developing robust privacy safeguards but also addressing the potential for algorithmic bias through careful data curation and ongoing monitoring. The development of AI ethics guidelines and frameworks is essential to ensure that these powerful technologies are used for the benefit of all, not just a select few.

Beyond compliance, data privacy should be viewed as a key component of building trust with users. Companies that prioritize transparency and empower individuals with control over their data are more likely to foster long-term relationships and build brand loyalty. This is especially true in an era where consumers are increasingly aware of the potential risks associated with data breaches and misuse. The implementation of privacy-enhancing technologies, such as differential privacy and federated learning, further demonstrates a commitment to protecting user data while still enabling valuable insights. These technologies allow companies to analyze data in a way that minimizes the risk of exposing individual identities, showcasing how innovation can be used to bolster, rather than undermine, ethical data practices. Ultimately, embracing data privacy is not just an ethical imperative but a competitive advantage.

The societal impact of data privacy extends beyond individual rights; it also has implications for democracy and social justice. Personal data can be misused to manipulate public opinion, undermine democratic processes, and exacerbate existing inequalities. Therefore, a robust framework for data privacy is essential for maintaining a healthy and equitable society. This requires not only responsible corporate behavior but also strong government oversight and public awareness. Individuals need to be educated about their rights and empowered to take control of their data. The pursuit of ethical innovation and responsible technology in data privacy is thus not just a technical challenge; it is a societal imperative that requires collective effort and a commitment to human-centered values.
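For readers curious how a privacy-enhancing technology such as differential privacy works in practice, here is a minimal sketch of one common building block, the Laplace mechanism, applied to a simple counting query. Everything named here (the `private_count` function, the `epsilon` value, the sample ages) is an assumption chosen for illustration, and the floating-point noise generation is not hardened enough for production use.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential draws with mean
    `scale` follows a Laplace distribution centered at zero.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Count matching records, then add Laplace noise of scale 1/epsilon.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so this noise scale gives epsilon-differential
    privacy for the query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical data set: ages of eight survey respondents.
ages = [23, 35, 41, 29, 52, 67, 38, 45]
rng = random.Random(0)  # seeded only so the illustration is repeatable

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"Noisy count of respondents aged 40+: {noisy:.2f}")
```

The true answer here is 4, but the published figure is deliberately blurred so that no single respondent's presence can be confidently inferred. A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives more accurate answers with weaker protection.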

Leading the Charge: Cultivating Ethical Innovation within Organizations

Building a culture of ethical innovation requires strong leadership that prioritizes human values and societal good. Leaders must cultivate an environment where ethical considerations are not merely an afterthought but are woven into the very fabric of the innovation process. This necessitates a fundamental shift in perspective, moving beyond a narrow focus on technological capabilities to a broader consideration of the societal impact of these advancements. Leaders like Tim Cook, who champion human-centered design, exemplify this approach, emphasizing the importance of aligning technological progress with human well-being.

This commitment must translate into concrete actions. Promoting open dialogue within organizations is crucial. Leaders should actively encourage critical thinking and constructive dissent, creating spaces where employees feel empowered to raise ethical concerns without fear of reprisal. Establishing clear ethical guidelines, informed by diverse perspectives and stakeholder input, provides a framework for responsible innovation. For example, Google’s AI Principles, which emphasize fairness, accountability, and transparency, demonstrate a commitment to embedding ethical considerations into AI development. However, such guidelines must be living documents, constantly evolving to address the complex and dynamic nature of technological advancement.

Furthermore, ethical innovation requires a proactive approach to anticipating and mitigating potential harms. This includes conducting thorough ethical impact assessments of new technologies, similar to environmental impact studies. By identifying potential risks early in the development process, organizations can implement safeguards and design solutions that minimize negative consequences. For instance, in the realm of data privacy, implementing privacy by design principles ensures that data protection is integrated into the very architecture of systems, rather than being an afterthought. This proactive approach is essential for building trust and fostering responsible data practices.

Investing in ethics training programs for employees is also critical. These programs should equip individuals with the knowledge and skills to navigate ethical dilemmas and make informed decisions. Case studies, simulations, and discussions of real-world scenarios can help employees develop a nuanced understanding of the ethical dimensions of their work. Moreover, organizations should establish mechanisms for reporting ethical violations and provide support for whistleblowers. Creating a culture of accountability is paramount to ensuring that ethical principles are upheld in practice.

Finally, ethical innovation necessitates a commitment to ongoing learning and adaptation. The technological landscape is constantly evolving, presenting new ethical challenges and opportunities. Leaders must foster a culture of continuous improvement, encouraging employees to stay abreast of emerging ethical issues and to engage in ongoing reflection and dialogue. By embracing a growth mindset and remaining open to new perspectives, organizations can navigate the complex ethical terrain of technological innovation and build a future where technology serves humanity, not the other way around. This resonates with Tim Cook’s vision of technology as a tool for empowerment, enabling individuals and communities to thrive in an increasingly interconnected world.

The Path Forward: Embracing Ethical Innovation for a Better Future

Ignoring ethical considerations in technological development can have far-reaching and detrimental consequences, exacerbating existing social inequalities and undermining human autonomy. The unchecked pursuit of technological advancement without a corresponding focus on ethical implications can lead to a future where technology serves to deepen societal divides rather than bridge them. For instance, algorithms designed without attention to bias can perpetuate discriminatory practices in areas like hiring, loan applications, and even criminal justice, disproportionately impacting marginalized communities.

A commitment to ethical innovation is not merely a matter of corporate social responsibility; it is essential for ensuring a sustainable and equitable future for all, a future where technology empowers individuals and strengthens the fabric of society. This necessitates a fundamental shift in how we approach innovation, moving beyond a purely profit-driven model to one that prioritizes human well-being and societal good.

Tim Cook’s human-centric philosophy provides a compelling framework for navigating the complex ethical landscape of emerging technologies. His emphasis on technology as a tool for enriching human experience, rather than an end in itself, underscores the critical importance of aligning technological advancements with human values. This approach requires a proactive and ongoing assessment of potential societal impacts, ensuring that innovation serves to enhance human capabilities and promote inclusivity. By prioritizing human-centered design and incorporating ethical considerations from the outset, we can harness the transformative power of technology to address pressing global challenges, from climate change to healthcare accessibility.

The development and deployment of artificial intelligence, in particular, demands careful ethical scrutiny. AI systems, trained on vast datasets often reflecting existing societal biases, can inadvertently perpetuate and amplify these biases, leading to discriminatory outcomes. Ensuring fairness, transparency, and accountability in AI algorithms is paramount. This involves implementing rigorous testing and auditing procedures, promoting diversity in the teams developing AI, and establishing clear ethical guidelines for AI deployment. Furthermore, ongoing public discourse and engagement are crucial for shaping the future of AI in a way that aligns with democratic values and protects human rights.

Data privacy in the digital age represents another critical ethical challenge. As our lives become increasingly intertwined with data-driven technologies, protecting sensitive personal information is not just a legal imperative, but a fundamental ethical responsibility. Businesses and organizations must prioritize data security and transparency, empowering individuals with control over their own data and ensuring that data collection and usage practices adhere to the highest ethical standards. This includes implementing robust data protection measures, providing clear and accessible privacy policies, and fostering a culture of data responsibility within organizations.

Ultimately, cultivating a culture of ethical innovation requires strong leadership at all levels, from individual developers to CEOs like Tim Cook. Leaders must champion ethical considerations, not as an afterthought, but as an integral part of the innovation process. This involves fostering an environment where open dialogue, critical thinking, and ethical reflection are encouraged, and where ethical guidelines are not just written down, but actively implemented and enforced. By embracing ethical innovation as a core value, we can ensure that technology serves as a force for good, creating a more just, equitable, and sustainable future for all.